There is a lot of research into using artificial intelligence (AI) in healthcare. Although this is a good thing, most of this research focuses on creating the algorithms. There is not enough high-quality research that assesses real-world clinical implementation, and the studies that do exist are not always easy to compare. To improve this, DECIDE-AI was published in May 2022 [1]. It is a reporting guideline for early-stage clinical evaluation of decision support systems driven by artificial intelligence. These guidelines will allow more thorough evaluation and better replicability of scientific studies that aim to demonstrate the effectiveness of AI-based decision support systems.

In this article, we explain why this is important, and how this will actually lead to improved implementation into clinical practice.

What are reporting guidelines exactly?

Reporting guidelines list the minimum information that a scientific paper should present, and so help researchers structure their work. Well-reported research is easier to understand, whether the reader is an editor, a peer reviewer, or a physician trying to make clinical decisions. It also makes it easier for other researchers to replicate the work and to include it in systematic reviews. Without such structured reporting, it is hard for anyone to evaluate the quality of the research, judge its suitability for clinical implementation, or compare metrics like diagnostic accuracy. This is true for both studies and clinical products.

What reporting guidelines exist already for AI?

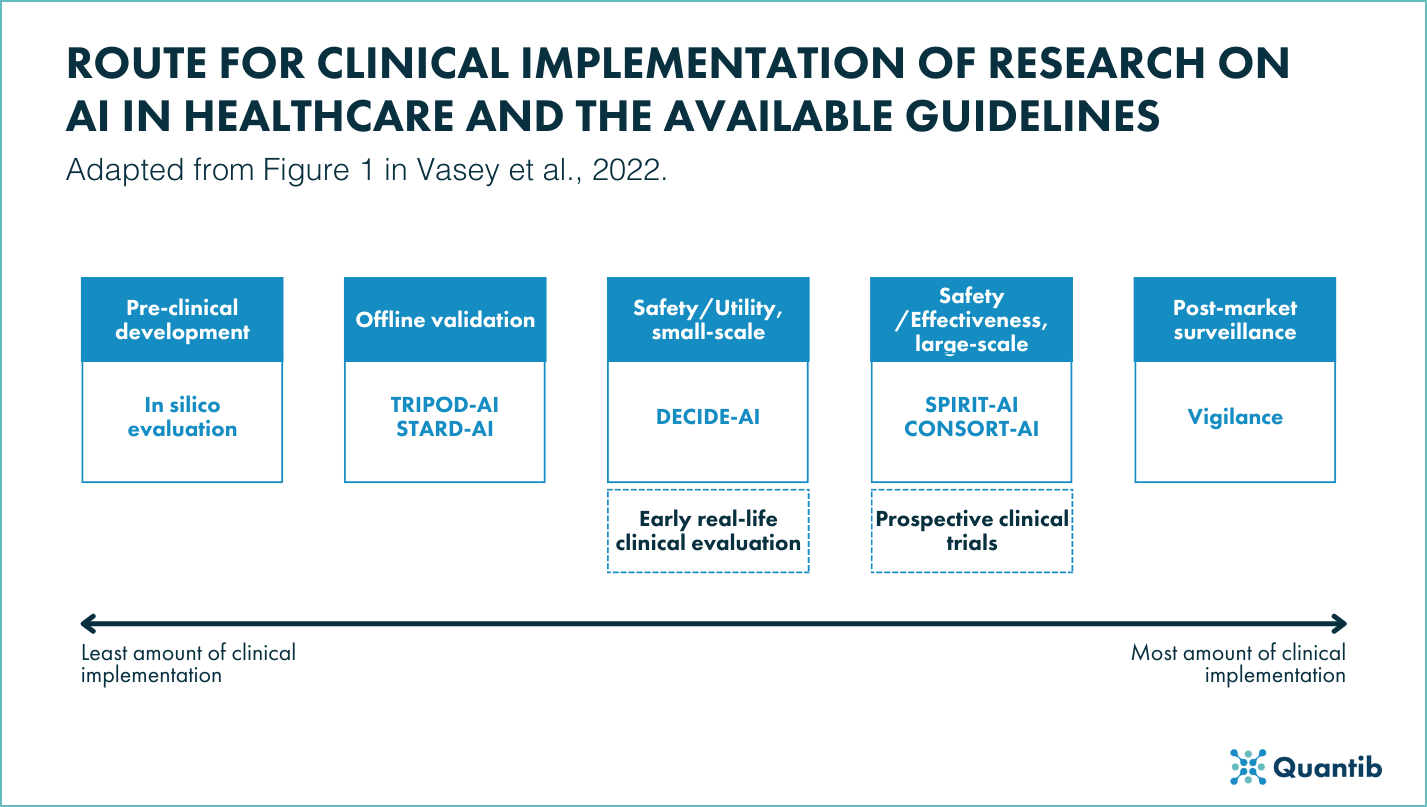

There are reporting guidelines for many different study designs, each containing a checklist of the points that a manuscript should contain at a minimum.

Guidelines for AI algorithm development and validation

Within the field of AI algorithm development and validation, existing guidelines are being adapted to create the STARD-AI [2] and TRIPOD-AI [3] guidelines.

Figure 1: Summarized overview of the available reporting guidelines for clinical implementation research on AI in healthcare [1].

STARD-AI guidelines

STARD-AI is an adaptation of the widely used STARD guideline [4] for diagnostic accuracy studies, designed to address AI-specific challenges. Examples include the definition of validation datasets and the reporting of sensitivity and of positive and negative predictive values.
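For readers less familiar with these metrics, the following is a minimal sketch of how sensitivity and the predictive values are derived from the four cells of a confusion matrix. It is not taken from STARD-AI itself. The `ConfusionCounts` class, the `diagnostic_metrics` function, and the example counts are hypothetical and serve only as illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical container for the four cells of a 2x2 confusion matrix."""
    tp: int  # diseased cases flagged by the algorithm
    fp: int  # healthy cases flagged by the algorithm
    fn: int  # diseased cases missed by the algorithm
    tn: int  # healthy cases correctly left unflagged


def diagnostic_metrics(c: ConfusionCounts) -> dict:
    """Compute standard diagnostic accuracy metrics from the counts."""
    def ratio(numerator: int, denominator: int) -> float:
        # Return NaN rather than raising when a denominator is zero.
        return numerator / denominator if denominator else float("nan")

    return {
        "sensitivity": ratio(c.tp, c.tp + c.fn),  # share of diseased cases detected
        "specificity": ratio(c.tn, c.tn + c.fp),  # share of healthy cases cleared
        "ppv": ratio(c.tp, c.tp + c.fp),          # chance a positive result is correct
        "npv": ratio(c.tn, c.tn + c.fn),          # chance a negative result is correct
    }


# Illustrative numbers only: 1000 cases, 100 of them with disease.
counts = ConfusionCounts(tp=90, fp=30, fn=10, tn=870)
metrics = diagnostic_metrics(counts)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Sensitivity and specificity describe the algorithm, whereas the predictive values also depend on how common the disease is in the dataset. This is one reason why a clear description of the validation dataset matters when comparing studies.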

TRIPOD-AI guidelines

The TRIPOD guidelines [5] are specific to the reporting of multivariable clinical prediction models in healthcare. TRIPOD-AI will create methodological standards for fair and neutral evaluation of machine learning models, while improving interpretation and terminology.

Guidelines for AI in large-scale clinical trials

On the other end of the evaluation spectrum, where large-scale safety and effectiveness studies are performed, more reporting guidelines are available. The SPIRIT-AI [6] guidelines focus on the reporting of clinical trial protocols, while CONSORT-AI [7] focuses on reporting the results of high-quality AI trials. Both guidelines come from the same initiative and include extra items on AI interventions compared to their non-AI counterparts [8,9]. These items cover aspects such as the skill required for use, the integration setting, and human-AI interaction.

DECIDE-AI guidelines

Between these two very important stages in the study of AI in clinical healthcare, namely the creation and initial validation of algorithms and the large-scale clinical trials, lies a large array of studies that should show the actual clinical use. Until DECIDE-AI, no dedicated guidelines existed for this difficult but important step of translating research into clinical practice and implementing it in patient care.

This stage allows assessment of the actual impact of an algorithm on user decisions, together with the relevance of these decisions and of any changes in them. It also makes it possible to test safety when the algorithm is used to influence human decision-making. As the DECIDE-AI steering group states in their 2020 paper, the relatively new field of AI should learn from the mistakes of others. For example, disasters in early drug trials (thalidomide being one example [10]) showed the need for proper testing of safety and use cases. The same should be done for AI in healthcare.

Another very important reason for these clinical studies is that they make it possible to evaluate and improve the product and its usability, and to implement modifications. Such studies allow exploration of which features, or which integrations with other parts of the patient care trajectory, will bring further efficiency and improvements in healthcare. This can be as ‘simple’ as an integration with a PACS or another related system, or as extensive as the implementation of new functionality in the product. Product changes based on clinical feedback are not possible during large-scale trials. Finally, large-scale trials are difficult and expensive. They can only be planned once smaller prospective trials have given an indication of the expected effect size and inclusion criteria, as well as information about when, where, and how to implement the AI.

As the DECIDE-AI steering group has put it [11]: “Transparent reporting on these aspects will not only avoid preventable harm and research waste but also play a key role in transforming AI from a promising technology to an evidence-based component of modern medicine”.

Are these guidelines only interesting for research(ers)?

It is true that the guidelines are made by and for researchers to report on their scientific studies. However, this does not mean that their scope cannot and does not reach further. Clinicians who are aware of these guidelines will be better able to compare AI products for healthcare and decide which one is best for their clinical setting, their patient population, and their use case. Those trying to bring their AI algorithms into clinical practice should also be aware of these guidelines, so that they can properly explain the validation process of their products both internally and externally.

Conclusion

The DECIDE-AI reporting guidelines bring a standardized aid to the clinical implementation of AI algorithms. We hope these guidelines will encourage scientific research with great potential to improve patient care to reach further into clinical implementation than it has to date.

Bibliography

1. Vasey, B. et al. Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. Nat. Med. 28, (2022).

2. Sounderajah, V. et al. Developing a reporting guideline for artificial intelligence-centred diagnostic test accuracy studies: The STARD-AI protocol. BMJ Open 11, 1–7 (2021).

3. Collins, G. S. & Moons, K. G. M. Reporting of artificial intelligence prediction models. Lancet 393, 1577–1579 (2019).

4. STARD 2015: An Updated List of Essential Items for Reporting Diagnostic Accuracy Studies | The EQUATOR Network. https://www.equator-network.org/reporting-guidelines/stard/.

5. TRIPOD statement. https://www.tripod-statement.org/.

6. Rivera, S. C., Liu, X., Chan, A.-W., Denniston, A. K. & Calvert, M. J. Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI Extension. BMJ (2020) doi:10.1136/bmj.m3210.

7. Liu, X., Rivera, S. C., Moher, D., Calvert, M. J. & Denniston, A. K. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: The CONSORT-AI Extension. BMJ 370, (2020).

8. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials | The EQUATOR Network. https://www.equator-network.org/reporting-guidelines/consort/.

9. SPIRIT 2013 Statement: Defining standard protocol items for clinical trials | The EQUATOR Network. https://www.equator-network.org/reporting-guidelines/spirit-2013-statement-defining-standard-protocol-items-for-clinical-trials/.

10. Thalidomide | Science Museum. https://www.sciencemuseum.org.uk/objects-and-stories/medicine/thalidomide.

11. Vasey, B. et al. DECIDE-AI: new reporting guidelines to bridge the development-to-implementation gap in clinical artificial intelligence. Nat. Med. 27, 186–187 (2021).